Recent advances in Virtual Reality (VR) and Augmented Reality (AR) have driven wider adoption of these tools, which have become increasingly vital in industries such as medicine, education, architecture, and gaming. VR digitally re-creates realistic environments so that users feel immersed in virtual surroundings, while AR uses the real world as a framework in which objects, images, and other virtual items are placed.

Both technologies belong to the broader family of extended reality (XR), and their convergence can produce environments in which VR incorporates elements of AR, creating interactive experiences that blend the real world with the digital and opening up a host of potential applications. The space industry is investigating how this technology could assist with space operations and change the way humans explore the solar system.

One example of this potential is The Aerospace Corporation’s patent-pending Project Phantom prototype, which uses VR and AR to let scientists and engineers (aka “Phantoms”) investigate digitized 3D renderings of extraterrestrial terrain captured by crew members in the field (aka “Explorers”), enabling deeper analysis and collaborative research.

“Project Phantom connects the real and virtual worlds together, so we can bring the scientific world into the operational space for astronauts on the Moon or Mars,” said Trevor Jahn, Engineering Specialist in the Space Architecture Department. “Although turnaround time for communication is a factor, it’s arguably an improved form of bi-directional communication between astronauts or users on location and whoever is accessing the virtual model. Its potential is undeniable.”

From VR to AR and Back Again

Project Phantom uses VR and AR to create a digital ecosystem in which scientists can engage and collaborate with their mission counterparts on the lunar or Martian surface, exchanging information through annotations, voice memos, and text.

With this approach, scientists can be given a 3D model of the Moon or Mars to explore in VR. They can annotate their findings digitally; these annotations appear as two-dimensional holographic sticky notes to which they can attach voice memos and text, entered with a virtual keyboard or speech-to-text. Explorers on the Moon or Mars can see these annotations through AR capabilities integrated into their space suits or vehicles, then physically visit the annotated locations, interact with them, and edit them or create their own annotations, which the scientific community can later access in VR. The fidelity of the VR environment the Phantoms explore can also be continuously improved as new data collected by Explorers is added to the rendering.
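The annotation loop described above amounts to a shared record that both the VR and AR sides can create and edit. The Python sketch below is illustrative only: the `Annotation` and `AnnotationStore` classes, their fields, and the local-coordinate convention are hypothetical assumptions, not Project Phantom’s actual data model, and real synchronization across an Earth-Mars link would also need conflict handling and delayed delivery.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical sketch: not Project Phantom's actual data model.
# (east, north, up) in meters, in the shared terrain model's frame (assumed convention).
Position = tuple[float, float, float]


@dataclass
class Annotation:
    """A holographic "sticky note" anchored to a point on the terrain model."""
    note_id: int
    position: Position
    created_by: str                       # "Phantom" (VR) or "Explorer" (AR)
    text: str = ""
    voice_memo_uri: Optional[str] = None  # placeholder pointer to a recorded memo
    last_edited_by: Optional[str] = None
    revision: int = 1


class AnnotationStore:
    """Hypothetical shared store that both VR and AR clients read from and write to."""

    def __init__(self) -> None:
        self._notes: dict[int, Annotation] = {}
        self._next_id = 1

    def create(self, position: Position, role: str, text: str = "",
               voice_memo_uri: Optional[str] = None) -> Annotation:
        note = Annotation(self._next_id, position, role, text, voice_memo_uri)
        self._notes[note.note_id] = note
        self._next_id += 1
        return note

    def edit(self, note_id: int, role: str, new_text: str) -> Annotation:
        # Keep the original author; record who made the latest change.
        note = self._notes[note_id]
        note.text = new_text
        note.last_edited_by = role
        note.revision += 1
        return note

    def notes(self) -> list[Annotation]:
        return sorted(self._notes.values(), key=lambda n: n.note_id)


# A Phantom flags a site in VR; an Explorer later updates it in AR and adds a new note.
store = AnnotationStore()
site = store.create((12.0, -4.5, 0.8), "Phantom", "Sample the layered outcrop here")
store.edit(site.note_id, "Explorer", "Sampled; layering continues about 2 m east")
store.create((14.1, -4.5, 0.7), "Explorer", "Possible second outcrop")
```

Keeping `created_by` separate from `last_edited_by` preserves who originated a note even after the other side revises it, which is useful when edits cross a delayed link.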

Other potential benefits include location finding without GPS. Explorers can also chart paths by stringing annotations together as virtual waypoints, then follow a virtual compass to a target destination.
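Chaining waypoints and pointing a compass at the next one is simple geometry once the Explorer’s position in the map is known. The sketch below assumes a flat local map frame with (east, north) coordinates in meters and headings measured clockwise from the map’s grid north; since neither the Moon nor Mars has a global magnetic field, a grid reference is a natural assumption. How Project Phantom actually localizes an Explorer without GPS is not described here, so `position` is simply taken as an input, and the `VirtualCompass` class and its `arrival_radius_m` parameter are hypothetical.

```python
import math

# (east, north) in meters in a flat local map frame (assumed convention).
Point = tuple[float, float]


def bearing_deg(frm: Point, to: Point) -> float:
    """Heading from `frm` to `to`, clockwise from grid north, in [0, 360)."""
    d_east, d_north = to[0] - frm[0], to[1] - frm[1]
    return math.degrees(math.atan2(d_east, d_north)) % 360.0


def distance_m(frm: Point, to: Point) -> float:
    return math.hypot(to[0] - frm[0], to[1] - frm[1])


class VirtualCompass:
    """Hypothetical compass that walks an ordered list of annotation waypoints."""

    def __init__(self, route: list[Point], arrival_radius_m: float = 2.0) -> None:
        self.route = list(route)
        self.arrival_radius_m = arrival_radius_m
        self.active = 0  # index of the waypoint currently being steered toward

    def update(self, position: Point):
        """Return (waypoint index, bearing in degrees, distance in m), or None at the end."""
        # Advance past every waypoint the Explorer is already close enough to.
        while (self.active < len(self.route)
               and distance_m(position, self.route[self.active]) <= self.arrival_radius_m):
            self.active += 1
        if self.active == len(self.route):
            return None  # final waypoint reached
        target = self.route[self.active]
        return self.active, bearing_deg(position, target), distance_m(position, target)


compass = VirtualCompass([(0.0, 10.0), (10.0, 10.0)])
print(compass.update((0.0, 0.0)))    # steer toward waypoint 0, due grid north, 10 m away
print(compass.update((0.0, 9.5)))    # waypoint 0 reached; now steer toward waypoint 1
print(compass.update((10.0, 10.0)))  # None: destination reached
```

Tracking the active index, rather than searching for the nearest unreached waypoint each time, keeps the compass from pointing back at a waypoint the Explorer has already passed.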

Photos On the Go: Leveraging Current Drone and Rover Technology

Project Phantom integrates VR, AR, and photogrammetry in a way that sets it apart from other currently available technologies. Current-generation AR primarily overlays images and models onto the environment but lacks Project Phantom’s feedback loop. VR readily lets users explore “digital twin” landscapes, but on its own it offers no way to pass data back to the real world, whether as annotations or otherwise.

Another advantage of Project Phantom is that its robust, two-way communication platform between Explorers and Phantoms can support better terrain mapping and exploration planning. With regard to an eventual mission to Mars, Jahn sees potential for the platform’s enhanced communications to improve terrain maps derived from drones, rovers, and techniques such as photogrammetry.

With photogrammetry, multiple overlapping images are captured, allowing scientists to calculate the depth of objects in the scene and build a 3D model. Cameras can also be mounted on space suits, habitats, rovers, and elsewhere as astronauts explore the surface of the Moon, Mars, or anywhere else. Combining the imagery from all of those cameras with other inputs, such as LiDAR data, can produce an even higher-fidelity 3D model of the operational space in VR.
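The depth calculation mentioned above is easiest to see in its simplest case: two overlapping images taken from rectified (row-aligned) camera positions a known distance apart. Under a pinhole camera model, depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two camera positions in meters, and d is the disparity in pixels between the same feature in each image. Full photogrammetry pipelines solve a more general multi-view problem (structure from motion with bundle adjustment), so this Python sketch, whose function names are hypothetical, shows only the core triangulation idea.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair under the pinhole camera model."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative indicates a bad match.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def triangulate(x_left_px: float, x_right_px: float, y_px: float,
                focal_length_px: float, baseline_m: float,
                cx_px: float, cy_px: float) -> tuple[float, float, float]:
    """Recover (X, Y, Z) in meters, in the left camera's frame, from one matched feature.

    (cx_px, cy_px) is the principal point. Both images share the row y_px
    because the pair is assumed to be rectified.
    """
    z = depth_from_disparity(focal_length_px, baseline_m, x_left_px - x_right_px)
    x = (x_left_px - cx_px) * z / focal_length_px
    y = (y_px - cy_px) * z / focal_length_px
    return x, y, z


# Example: two drone frames 0.5 m apart, 1000 px focal length, 25 px disparity.
print(depth_from_disparity(1000.0, 0.5, 25.0))                     # 20.0
print(triangulate(740.0, 715.0, 360.0, 1000.0, 0.5, 640.0, 360.0))  # (2.0, 0.0, 20.0)
```

Because depth varies inversely with disparity, distant terrain produces small disparities in which a one-pixel matching error shifts Z substantially. Wider baselines and additional overlapping views reduce that error, which is one reason combining imagery from many cameras, plus LiDAR, improves model fidelity.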

Testing the Prototype

To develop Project Phantom, Jahn collaborated with Aerospace’s Immersive Tech and Animation Studio, working directly with engineer Austin Baird, who developed the underlying applications, and engineer Evan Cooper, who used photogrammetry to create the 3D models.

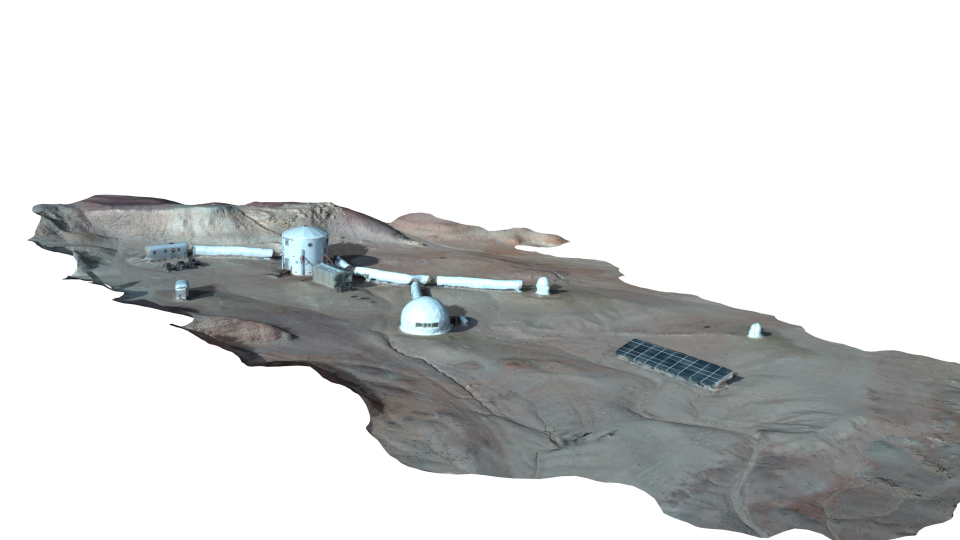

In late 2022, Jahn field-tested Project Phantom’s photogrammetry capabilities as a member of the first all-Aerospace crewed analog mission at the Mars Desert Research Station (MDRS). From the remote Utah desert, he used a rover and a drone to document the MDRS complex and the surrounding landscape. With a communications time delay in place to simulate the distance between Earth and Mars, Jahn then uploaded the images to the team in Aerospace’s El Segundo laboratories, which generated a 3D model of the complex. The team also demonstrated the ability to improve the fidelity of existing 3D models using various photogrammetry techniques.
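The size of a realistic Earth-Mars delay follows from the one-way light time: distance divided by the speed of light. The Earth-Mars distance ranges from roughly 55 million km at the closest oppositions to about 400 million km near solar conjunction, giving one-way delays of roughly 3 to 22 minutes. The delay actually used at MDRS is not stated here; the Python sketch below, built around a hypothetical `DelayedLink` class, simply shows how such a delay might be computed and imposed on uploaded images.

```python
import heapq

SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the meter


def one_way_light_time_s(distance_km: float) -> float:
    """One-way signal delay in seconds for a given Earth-Mars distance."""
    return distance_km * 1000.0 / SPEED_OF_LIGHT_M_S


class DelayedLink:
    """Hypothetical simulated link that holds each message until its delay has elapsed."""

    def __init__(self, delay_s: float) -> None:
        self.delay_s = delay_s
        self._queue: list[tuple[float, int, str]] = []  # (release time, sequence number, payload)
        self._seq = 0  # tie-breaker keeps messages with equal release times in send order

    def send(self, now_s: float, payload: str) -> None:
        heapq.heappush(self._queue, (now_s + self.delay_s, self._seq, payload))
        self._seq += 1

    def receive(self, now_s: float) -> list[str]:
        """Return every payload whose release time has arrived, oldest first."""
        delivered = []
        while self._queue and self._queue[0][0] <= now_s:
            delivered.append(heapq.heappop(self._queue)[2])
        return delivered


# One-way delay in minutes at roughly the closest and farthest Earth-Mars distances.
print(round(one_way_light_time_s(55e6) / 60, 1))   # 3.1
print(round(one_way_light_time_s(400e6) / 60, 1))  # 22.2

link = DelayedLink(delay_s=600.0)  # an assumed 10-minute one-way delay
link.send(0.0, "drone image batch 1")
print(link.receive(599.0))  # []
print(link.receive(600.0))  # ['drone image batch 1']
```

A priority queue keyed on release time lets messages sent at different moments arrive in the right order even if the simulated delay is later changed to track a shifting planetary distance.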

“By the end of the simulation, we had a 3D model calibrated to the area and showed we can do it with photogrammetry models,” said Jahn. “I also got to experience issues that might face someone using augmented reality in the future on the Moon or Mars. All of these things culminated in us making leaps and bounds without being able to meet in person or exchange information in real time.”

In addition to MDRS, Aerospace has demonstrated the technology at the Planetary Analog Test Site at Johnson Space Center in Houston. Known as the Rock Yard, the site is a physical approximation of the lunar surface that has been used over the years to test a variety of robots and rovers.

Implications for Future Human Spaceflight

As the technology evolves rapidly, new capabilities could further augment and shape the future of space exploration. Aerospace’s prototype is designed to eventually incorporate holograms as well as animated 3D objects, further expanding its potential for space and commercial applications.

Jahn anticipates that the technology will evolve to reflect the real world more accurately, with the digital objects in model-based simulations computing collision mechanics. This would, in effect, create mixed reality environments in which digital and real elements obey the same laws of physics. Capturing features and terrain more accurately also helps mitigate risk.

A phone-based Project Phantom app has also been created, with functionality comparable to its headset counterpart but with the advantage of a physical interface. The app can also incorporate simple 3D models and animated holograms that the user can place in the real world.

“I’m envisioning an ultimate form of AR, in which you have a 3D model, the real world, and have holograms that interact with it and obey the laws of physics and don't pass through other objects. You could introduce artificial intelligence into these 3D objects and establish rules for how they can navigate beyond just the laws of physics,” said Jahn. “Given where we are today with Project Phantom, it could open up a world of possibilities, and I’m very excited for how it's postured us.”